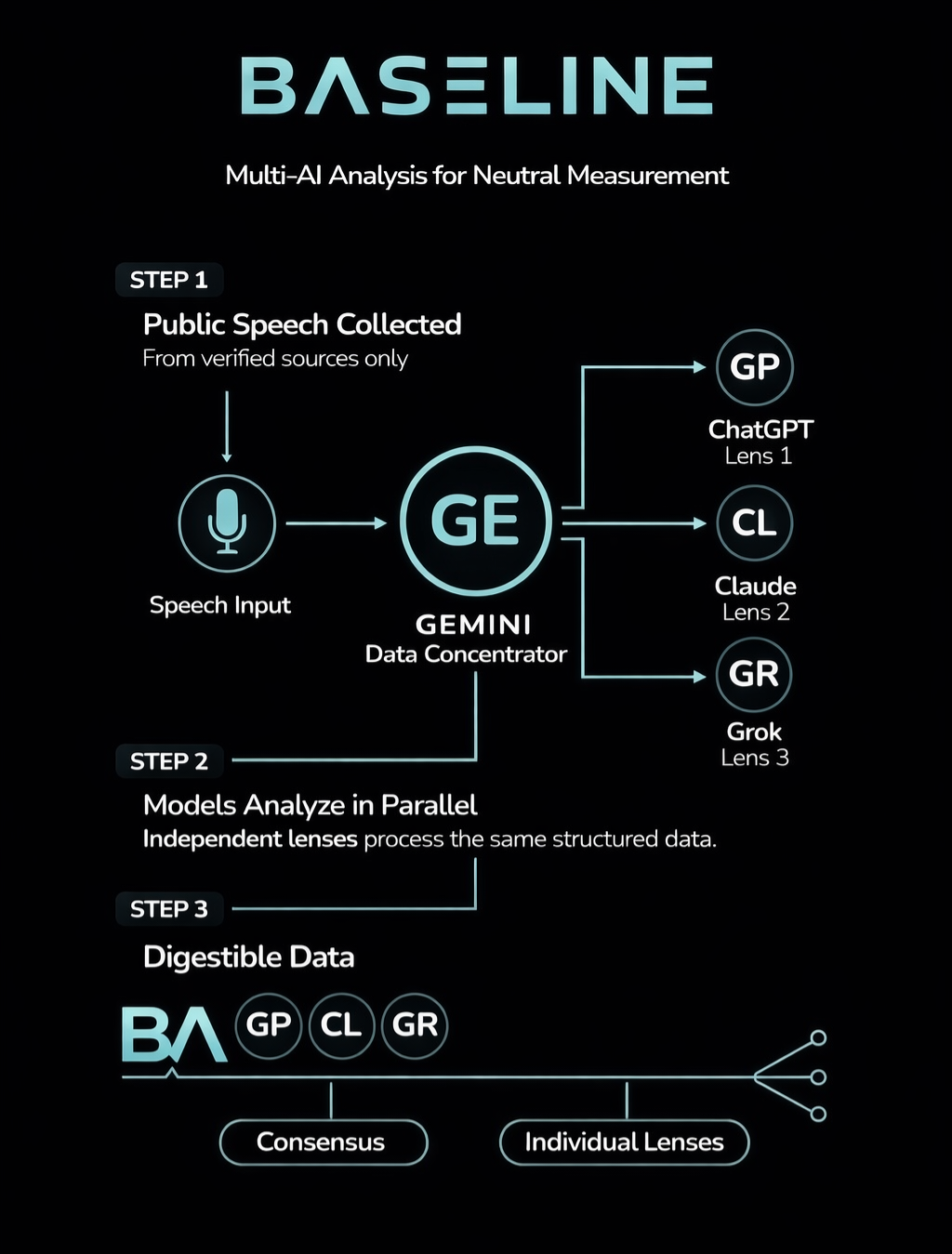

How it works

Methodology

Baseline displays independent outputs side by side, then computes a separate consensus layer. Sources and context are always shown. This is observational analysis only, not a fact-check.

Identical input is processed independently by three AI systems. Outputs are displayed as returned, without editorial rewriting.

A compact readout of recurring language patterns over time, with match counts shown by tier.

A measurement surface for rhetorical structure across five framing dimensions.

Side-by-side lens outputs, plus a separate consensus layer for shared patterns and variance.

What We Measure

Every statement is processed through multiple measurement layers. Each metric is computed independently — no metric influences another.

Signal Metrics

Each AI model computes four independent measurements per statement. Scores are displayed on a 0–100 scale, with no thresholds or labels.

How closely this statement’s language mirrors the figure’s prior statements on the same topic.
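The page does not say how this similarity is computed. A minimal sketch follows. It assumes plain word overlap (Jaccard similarity) against each prior statement, keeps the best match, and scales the result to 0–100. The tokenizer and the function names are hypothetical. Production systems would more likely compare embeddings or phrase-level patterns.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase word tokens; a deliberately simple, hypothetical tokenizer."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection divided by size of the union (0.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def consistency_score(statement: str, prior_statements: list[str]) -> float:
    """Best word overlap with any prior statement on the same topic, scaled to 0-100."""
    current = tokens(statement)
    if not current or not prior_statements:
        return 0.0
    best = max(jaccard(current, tokens(p)) for p in prior_statements)
    return round(100 * best, 1)


print(consistency_score("We will cut taxes", ["We will cut taxes now", "Healthcare matters"]))  # prints 80.0
```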

How much new language or framing this statement introduces compared to prior patterns.
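The following is one hypothetical way to approximate this novelty measure. It reports the share of the statement's distinct words that never appear in the figure's prior statements, scaled to 0–100. Both the word-level definition and the function name are assumptions. The page only says the metric captures new language or framing.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase word tokens; a deliberately simple, hypothetical tokenizer."""
    return set(re.findall(r"[a-z']+", text.lower()))


def novelty_score(statement: str, prior_statements: list[str]) -> float:
    """Share of the statement's distinct words never used in prior statements, scaled to 0-100."""
    current = tokens(statement)
    if not current:
        return 0.0
    seen: set[str] = set()
    for prior in prior_statements:
        seen |= tokens(prior)
    new_words = current - seen
    return round(100 * len(new_words) / len(current), 1)


print(novelty_score("We will cut taxes and tariffs", ["We will cut taxes"]))  # prints 33.3
```

This is computed directly from the text rather than as 100 minus the similarity score. That keeps it consistent with the page's statement that no metric influences another.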

The rate of emotionally charged language — intensity markers, urgency signals, sentiment-loaded phrasing.
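A rate like this is often approximated with a lexicon. The sketch below assumes a small, hypothetical word list and reports the percentage of word tokens that appear in it. It ignores punctuation-based urgency signals such as exclamation marks. A real lexicon would need to be far larger and validated.

```python
import re

# Hypothetical lexicon for illustration only.
CHARGED_TERMS = {"urgent", "crisis", "disaster", "outrageous", "immediately", "terrible"}


def intensity_rate(statement: str) -> float:
    """Percentage of word tokens that appear in the charged-language lexicon (0-100)."""
    words = re.findall(r"[a-z']+", statement.lower())
    if not words:
        return 0.0
    hits = sum(1 for w in words if w in CHARGED_TERMS)
    return round(100 * hits / len(words), 1)


print(intensity_rate("This is an urgent crisis, act now"))  # prints 28.6
```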

Topical spread of the statement. Higher values indicate multiple subjects; lower values indicate tight focus.
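One way to turn "multiple subjects versus tight focus" into a 0–100 number is normalized Shannon entropy over topic hits. The sketch below makes three assumptions. It uses a hypothetical keyword-to-topic map. It scores a statement that touches a single topic as 0. It scores a statement spread evenly across every topic in the map as 100. The page does not say which method the app uses.

```python
import math
import re
from collections import Counter

# Hypothetical topic taxonomy for illustration only.
TOPIC_KEYWORDS = {
    "economy": {"tax", "taxes", "jobs", "inflation"},
    "health": {"hospital", "healthcare", "insurance"},
    "defense": {"military", "troops", "defense"},
    "immigration": {"border", "visa", "asylum"},
}


def topical_spread(statement: str) -> float:
    """Normalized entropy of topic-keyword hits, scaled to 0-100."""
    words = re.findall(r"[a-z']+", statement.lower())
    counts = Counter(
        topic for w in words for topic, kws in TOPIC_KEYWORDS.items() if w in kws
    )
    if len(counts) < 2:  # zero or one topic: no spread
        return 0.0
    total = sum(counts.values())
    entropy = -sum((c / total) * math.log(c / total) for c in counts.values())
    return round(100 * entropy / math.log(len(TOPIC_KEYWORDS)), 1)


print(topical_spread("We will cut taxes and fund healthcare"))  # prints 50.0
```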

When models converge on similar measurements, the consensus count is displayed. When they diverge, variance is flagged.

Each model processes the statement separately — none can see the others' results. Convergence is computed after all three models have returned their independent outputs.
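The convergence step described above can be sketched as a function that runs only once all three scores for a metric are available. In this sketch, the consensus count is the number of models whose score falls within a tolerance of the median. Variance is flagged when the gap between the highest and lowest score exceeds a threshold. Both the tolerance and the threshold values are hypothetical. The page does not publish them.

```python
from statistics import median

AGREEMENT_TOLERANCE = 10.0  # hypothetical: points from the median that still count as agreement
VARIANCE_THRESHOLD = 25.0   # hypothetical: max-min gap that triggers a variance flag


def consensus(scores: dict[str, float]) -> dict:
    """Combine one metric's scores from all three models, after each has returned."""
    if len(scores) != 3:
        raise ValueError("convergence requires all three independent outputs")
    values = list(scores.values())
    mid = median(values)
    count = sum(1 for v in values if abs(v - mid) <= AGREEMENT_TOLERANCE)
    spread = max(values) - min(values)
    return {
        "consensus_count": count,
        "spread": spread,
        "variance_flagged": spread > VARIANCE_THRESHOLD,
    }


print(consensus({"model_a": 62.0, "model_b": 58.0, "model_c": 91.0}))
```

In the example, two models agree closely and the third is an outlier. The count is 2 and the 33-point spread is flagged.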

When models produce significantly different framing classifications for the same statement, a variance banner appears. This is not an error — it reflects genuine measurement divergence.
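Framing classifications are categorical, so the variance check for them can work differently from the numeric metrics. The sketch below assumes a hypothetical rule: show the banner when no single framing label is shared by a majority of the models. The label names are placeholders. The page does not define what counts as "significantly different."

```python
from collections import Counter


def needs_variance_banner(labels: dict[str, str]) -> bool:
    """Hypothetical rule: banner when no framing label has a majority across models."""
    if not labels:
        raise ValueError("no model outputs to compare")
    top_count = Counter(labels.values()).most_common(1)[0][1]
    return top_count <= len(labels) // 2


print(needs_variance_banner({"a": "economic", "b": "security", "c": "moral"}))  # prints True
print(needs_variance_banner({"a": "economic", "b": "economic", "c": "moral"}))  # prints False
```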

Congressional Vote Record

For congressional figures, voting records are tracked separately from speech metrics. Votes are displayed as “recorded” or “not recorded” — never color-coded by approval.

Teal = vote recorded • Gray = not recorded • Never red/green
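The display rule above means a badge should depend only on whether a vote exists, never on how the figure voted. A minimal sketch of that rule follows. The `Vote` type and its fields are hypothetical. The function deliberately ignores the vote's position.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Vote:
    bill_id: str             # hypothetical identifier field
    position: Optional[str]  # e.g. "yea" or "nay"; None when no vote was recorded


def vote_badge(vote: Vote) -> tuple[str, str]:
    """Return (label, color). The position is never used, so yea and nay look identical."""
    if vote.position is None:
        return ("not recorded", "gray")
    return ("recorded", "teal")


print(vote_badge(Vote("bill-1", "nay")))  # prints ('recorded', 'teal')
```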

Three-Model Pipeline

Every statement flows through three independent AI models. Each model receives identical input and returns its own measurements. No model can see another's output.

Independent measurement from the first AI system.

Independent measurement from the second AI system.

Independent measurement from the third AI system.
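The fan-out described in this section can be sketched with a thread pool. Each model receives only the statement string, which is immutable and therefore identical for all three. Models share no state, and results are collected only after every call has finished. The model functions, their names, and the shape of the returned measurements are hypothetical stand-ins for real AI model calls.

```python
from collections.abc import Callable, Mapping
from concurrent.futures import ThreadPoolExecutor

Measurement = dict[str, float]
Model = Callable[[str], Measurement]


def run_pipeline(statement: str, models: Mapping[str, Model]) -> dict[str, Measurement]:
    """Send the same statement to every model in parallel; return once all have finished."""
    with ThreadPoolExecutor(max_workers=len(models)) as pool:
        # Each call gets only the statement, so no model can see another's output.
        futures = {name: pool.submit(model, statement) for name, model in models.items()}
        # .result() blocks, so convergence can start only after every model has returned.
        return {name: future.result() for name, future in futures.items()}


# Hypothetical stand-in models for illustration.
stub_models = {
    "model_a": lambda s: {"intensity": 12.0},
    "model_b": lambda s: {"intensity": 15.0},
    "model_c": lambda s: {"intensity": 40.0},
}
print(run_pipeline("We will cut taxes", stub_models))
```

The returned dictionary would then feed a separate convergence step, keeping measurement and consensus as distinct stages.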

Product surfaces

Representative measurement surfaces from the app.